The content strategy of civil discourse, part 3

In part two, we talked about how we got to a point where uncivil discourse is incentivized, and I suggested that there may be a better way. But part of that better way requires us to explore the role design plays in that discourse.

Back in 2009, Derek Powazek (who literally wrote the book on online community) gave a fantastic talk called “Design for the Wisdom of Crowds” in which he laid out the notion that how we design systems can influence the conversation that happens within them. As an example, he pointed out how Amazon evolved the way they presented reviews.

In the early days, Amazon would put the most recent review first. This seemed logical enough until you realize that people assume that what’s at the top of the page is the most authoritative review. Therefore, if the most recent review was positive, people would assume the product overall was positive, and if the most recent review was negative, they would assume the product overall was negative. (This demonstrates a cognitive bias called “primacy”, which designers need to be hyperaware of when presenting content.)

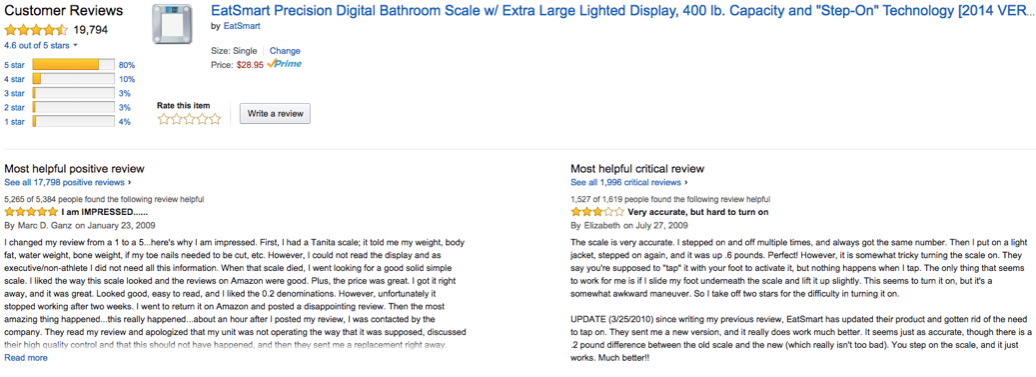

So, Amazon created a category called “most helpful” and allowed users to rate the usefulness of individual reviews; the review with the highest rating would bubble to the top. But, again, whether that top review was positive or negative would have an outsized influence on the perceived opinion of the product.

Finally, Amazon evolved to show the most helpful positive and negative reviews posted side by side. This forced the user, visually, to give equal weight to the positive and negative reviews rather than just make a blanket assumption based on visual hierarchy.
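To make the three stages concrete, here is a minimal sketch of each ordering. It is not Amazon's actual implementation: the `Review` fields, the function names, and the star thresholds for "positive" and "critical" are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Review:
    text: str
    stars: int          # 1 to 5
    helpful_votes: int  # readers who marked the review as helpful
    posted_day: int     # hypothetical field: larger means more recent


def most_recent_first(reviews):
    """Stage 1: the newest review lands at the top of the page."""
    return sorted(reviews, key=lambda r: r.posted_day, reverse=True)


def most_helpful_first(reviews):
    """Stage 2: the review with the most helpful votes lands at the top."""
    return sorted(reviews, key=lambda r: r.helpful_votes, reverse=True)


def top_positive_and_critical(reviews, positive_min=4, critical_max=2):
    """Stage 3: pick one review from each side to show side by side.

    The thresholds are assumptions; either slot is None if no review qualifies.
    """
    positive = [r for r in reviews if r.stars >= positive_min]
    critical = [r for r in reviews if r.stars <= critical_max]
    best_positive = max(positive, key=lambda r: r.helpful_votes, default=None)
    best_critical = max(critical, key=lambda r: r.helpful_votes, default=None)
    return best_positive, best_critical


# Made-up sample data.
reviews = [
    Review("Broke after a week.", stars=1, helpful_votes=40, posted_day=3),
    Review("Does the job.", stars=4, helpful_votes=12, posted_day=10),
    Review("Best purchase this year.", stars=5, helpful_votes=55, posted_day=1),
    Review("Too loud.", stars=2, helpful_votes=8, posted_day=7),
]

positive, critical = top_positive_and_critical(reviews)
print("Top of page, stage 1:", most_recent_first(reviews)[0].text)
print("Top of page, stage 2:", most_helpful_first(reviews)[0].text)
print("Side by side, stage 3:", positive.text, "|", critical.text)
```

In the first two stages a single review owns the top of the page, so its sentiment colors the whole product. In the third, the layout itself guarantees that both sides get a slot.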

Similarly, Amazon evolved from showing just the average star rating, which could be misleading, to showing a histogram, which could provide much-needed context. There’s a difference between a product that gets three stars because most people gave it three stars and a product that gets three stars because a lot of people gave it five stars and a lot of people gave it one star.
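A quick sketch shows why the average alone hides that difference. The two rating lists below are invented for illustration, and the function names are hypothetical.

```python
from collections import Counter


def average(ratings):
    """Mean star rating."""
    return sum(ratings) / len(ratings)


def histogram(ratings):
    """Count of ratings per star level, from 5 stars down to 1."""
    counts = Counter(ratings)
    return {stars: counts.get(stars, 0) for stars in range(5, 0, -1)}


def render(hist):
    """Draw a plain-text bar per star level."""
    return "\n".join(f"{stars} stars | {'#' * count}" for stars, count in hist.items())


# Made-up data: ten ratings each.
consensus = [3] * 10            # everyone thinks the product is just okay
polarized = [5] * 5 + [1] * 5   # people either love it or hate it

for name, ratings in (("consensus", consensus), ("polarized", polarized)):
    print(f"{name}: average {average(ratings):.1f}")
    print(render(histogram(ratings)))
```

Both products average exactly three stars, but the histograms look nothing alike: one has a single bar in the middle, the other has two bars at the extremes.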

Even color can influence the state of mind of a participant in a conversation. There’s an experiment in which different cognitive tasks were presented on computer screens with either a red, blue, or white background. Red backgrounds enhanced performance on detail-specific tasks, such as proofreading (a 31% gain over the same task with a blue background). Blue backgrounds enhanced performance on more creative tasks (twice as many ideas from a brainstorming task).

Red, generally, puts us into a fight or flight state. We become hyperfocused on the details because one of them could save our lives. Blue puts us in a more thoughtful state. Now, if you wanted to produce a website where people had to talk calmly about the issues, what color would you choose?

None of this should come as much of a shock. Setting dictates behavior in real life. If you were to walk into a restaurant with soft music, beautiful surroundings, and pristine dishes, you wouldn’t assume this is a place where it’s appropriate to drop the F-bomb as loudly as possible.

On the other hand, if you walked into a dingy bar with loud music playing you might assume dropping the F-bomb loudly was actually encouraged.

Now look at this…

The cool coloring and pristine typography tell you “we put a lot of thought and effort into how this place looks so you should put a lot of thought and effort into how you behave here.”

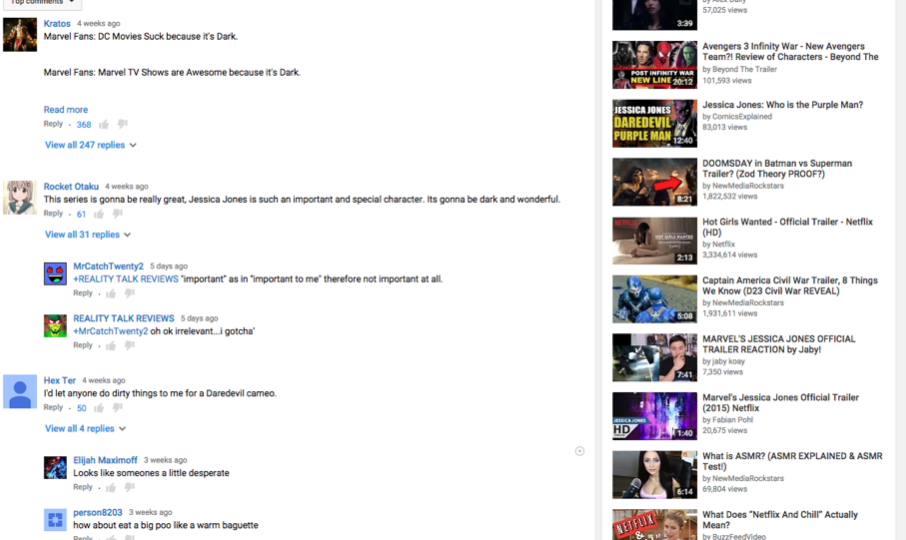

Now look at this…

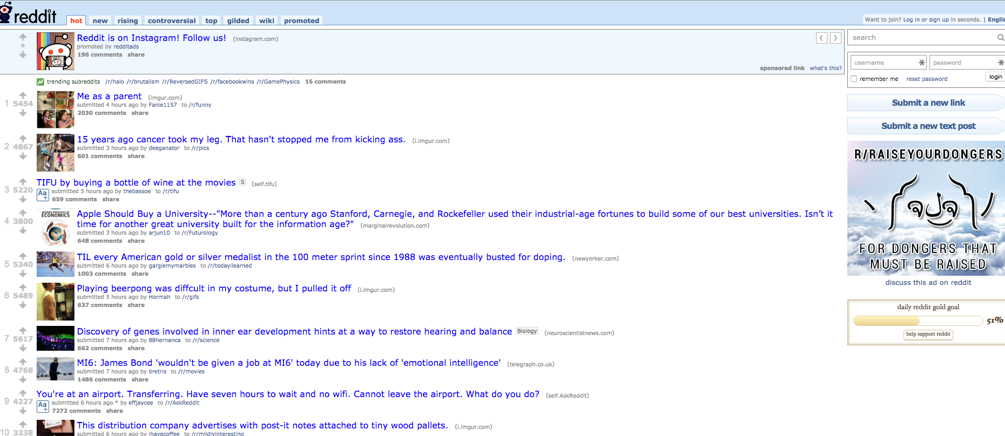

And this…

These interfaces send the opposite message. “We put the least amount of effort possible into how this place looks so you should put the least amount of effort into how you conduct yourself.”

Another myth to consider is the idea that anonymity is the deciding factor in the quality of discourse. While someone might feel emboldened when they can’t be identified while posting, very bad things happen even when people use their own names. In 2015, Monica Lewinsky gave a TED talk. Many users, posting under their very own names, wrote hateful things in response, to the point where an entire blog post had to be written to address them.

So, if it’s not about anonymity, what is it about? Well, for one thing, it’s about setting the standard. Community guidelines should be clear. Not just so you can communicate to your audience what will and won’t be tolerated, but also so you can align internally on what you stand for. Additionally, the peer groups of hate speech mongers can have an influence on what they will or won’t post. This experiment showed that when a user thinks someone from their peer group with more followers than they have disapproves, they will, at least temporarily, shut up. (Full disclosure: I’m quoted in that article.)

In our next installment, we’ll look at the larger picture around how we try to make people behave online, and learn that calling out bad behavior, while important, will only get us about half the way there.
