How to integrate frequent design research into your agile process

In the course of long-term Agile product design projects, teams commonly conduct design research once at the very beginning of the project, if at all. Product teams don't always see the value in regularly conducted research, so the user perspective can get lost as work progresses. This lack of discovery or testing can leave designers, developers, and leaders scratching their heads, wondering why they're spending time on certain features and initiatives.

You may be asking yourself the same questions over and over again:

- How can we get designs in front of stakeholders sooner?

- How can we make better use of our testing time to make sure we are building the right thing?

- How can we give the team insight into the “why” of what we are building, and confidence that it is the right thing to build?

Finding the right research cadence for your project will usually help answer these questions. Regardless of the type of project your team is working on, there is a way to figure out what that cadence should be and how you can fit design research into your timeline. I’ll dive into an approach we took on a recent Agile product design effort and share the key steps for helping your project benefit from regular research.

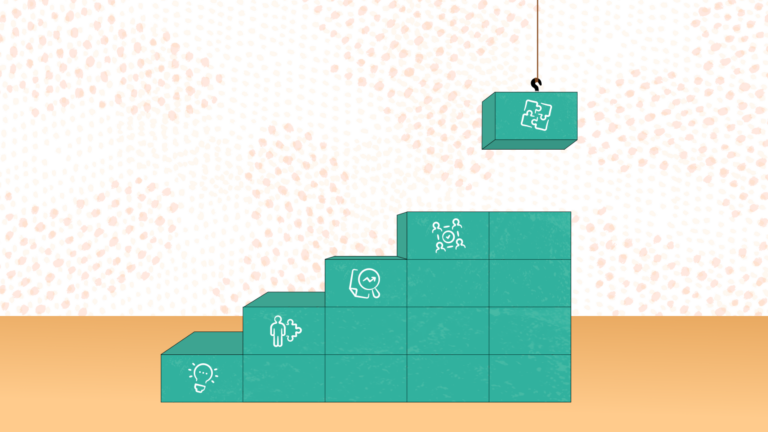

At a high level, finding the right design research structure rests on four key principles:

- Create a dedicated research track with one or more researchers owning the initiatives

- Execute research-informed design iteration: split research time into smaller discovery, validation, and testing cycles that regularly inform design iteration

- Continue testing the product, especially after features are released

- Get on a cyclical research schedule so that team members come to expect when and how research will happen

Create a Dedicated Research Track

First, assign a dedicated researcher or research team. This person or group's sole responsibility is to plan and execute research and analyze the resulting data in order to form recommendations for feature development. They should be consistently involved in internal design sessions as well as in meetings with outside stakeholders.

These sessions are important opportunities for researchers to:

- Regularly identify and capture new questions for research. For example, they may want to know how customers use the information provided within a certain feature, or where users are getting stuck in a certain workflow, and why. We use Miro at Think Company to collect questions alongside images of designs or sketches.

- Represent the customer by sharing customer feedback at critical checkpoints with stakeholders, product owners, and development teams.

These researchers should build and maintain a pool of participants they can continually draw on for quick research efforts. These participants should be willing to provide feedback regularly during the discovery and experimentation phases.

We like to run intercept tools like Hotjar on the existing platform to recruit participants. We also run email-based screeners using Google Forms to identify interested customers and collect demographic and user data about participants, so that we know how representative our participant pool is of the overall customer base. During our regular sessions, we continually gauge each participant's interest and the value of their feedback. This helps us keep our participant pool fresh and full of customers who bring value to our research efforts.

In addition to the recurring activities above, researchers should spend time understanding the system and its users. I'll call this "light discovery." Light discovery should run for about two to three weeks; its goal is to identify new opportunities that the team can ideate around, create concepts for, and test.

Suggested activities may include:

- Review of analytics to understand macro usage trends, like page views, time on page, drop-off points, and common search terms. Use Hotjar and Google Analytics to investigate this data.

- Observation of customer usage through in-depth interviews, contextual inquiry, or usability testing of the existing platform to understand context and identify key pain points and workarounds.

- Understanding of the business side of the product through in-depth conversations with stakeholders about the goals, the product, and the team's history.

- Card sorting exercises to understand how customers and stakeholders group similar content. If you can't run the exercise in person, use an online tool such as OptimalSort.

These activities can happen alongside system audit activities and design conceptualization. The goal is to gather just enough information to start sketching workflow, information architecture (IA), or layout concepts. Then, as soon as you have an idea, test it.

Execute Research-Informed Design Iteration

This brings us to the next important principle: test often to determine whether the team is designing the right thing. The goal here is to try out a number of evidence-based ideas and test them. Start with the lowest-fidelity artifacts, such as a new content model, and then work your way up to testing things like:

- Sketches and blocky wireframe concepts

- Higher-fidelity wireframes

- Fully designed prototypes

After each round of testing, use what you learned to iterate, improve, and increase the fidelity of the design. Think of it as another review input; in addition to bringing concepts to client stakeholders for demo and approval, you’re also bringing them to potential customers.

Keep the reporting of findings light so that researchers can focus on socializing the findings with the larger team instead of creating dense report presentations. This is why it is critical that the researchers stay involved in design sessions. Designers and other team members should be encouraged to observe moderated testing, but it is the ongoing conversations about findings and opportunities that will ingrain them in the design process.

On a recent project, our content strategist had an idea for a new site navigation and content model, but we didn't have polished designs to test the idea with. That's OK. In this type of situation, you can get creative with your testing methods, because the only goal of this phase is to gather enough information to validate or improve any part of the idea. We ran a tree test, which helped foster conversations with customers about how they navigate to the content they need today, and how a new content model might make their experience more or less efficient.

Ultimately, you can find ways to see whether an idea is the right one before investing significant time in designing and developing it.

Don’t Forget About Post-Release Testing

Once you've gone through three to four rounds of research-informed design iteration, ideas should be fairly well defined and will soon move into development. After development, your team will need to identify another set of ideas to explore through research-informed design iteration. What are the inputs for generating those ideas? More testing! As you do rounds of post-release testing and follow up on previous ideas, you might identify future opportunities to explore. Keep a prioritized list of those opportunities, and circle back to them when the time comes.

You can also do some observational testing on features that have made it through the delivery pipeline. This effort shouldn't differ much from the initial light discovery. Chances are the prototype you previously tested as a design concept didn't include real data or fully built-out behaviors, so this is the point where you will discover where people rely on workarounds or repeatedly get tripped up by interactions that don't behave as expected.

Get into a Cyclical Research Schedule

Try to get in the habit of keeping a regular research cadence, ideally one cycle every three weeks. That way, the wider team will learn to expect and plan for sessions, which will only make the researcher's job easier.

Here’s an example of what a design research cycle might look like:

- Week 1: Prepare the research plan, discussion guide, and materials. This is where designers and stakeholders can help by contributing questions and refining the prep materials.

- Week 2: If the research activities are unmoderated, like a survey or first-click test, launch the test and monitor participation regularly. If they're moderated, like an interview or observation, conduct the sessions and document field notes and weekly summaries. We like to post these in a Basecamp thread or another widely accessible, searchable location for the entire team.

- Week 3: Prepare summaries and design recommendations. Keep these low-fidelity, rather than in the form of a formal presentation, to encourage socialization of the findings among the team. Even so, written documentation provides a stable source of evidence if ideas are questioned later in the project.

You'll know it's time to define another round of research when you have new questions to answer, new participants identified to answer them, or a design that has increased in fidelity (if there is a design at all).

Stay Focused on Your Goal

Your overarching goal should be to keep design and research teams closely engaged so that you regularly get answers to questions as design activities progress. This will give you confidence that you are designing and building the right thing, which ultimately moves design efforts forward. You get something tangible in front of stakeholders and customers quickly, and you socialize research findings within product teams so that everyone understands the "why" of what you are building.

You'll know you've succeeded when everyone is beating the same customer feedback drum. Team members across disciplines will start citing research findings in their day-to-day conversations, and, in a moonshot scenario, you'll even start seeing findings embedded in Jira tickets.