At Think Company, we often start projects by creating a purpose statement to guide our work. The last phrase of the purpose statement reads, “we will know we are successful when…” We usually complete this phrase with metrics of user experience success—quantitative measurements ranging from ultimate goals like increased sales and stronger customer loyalty to increased tool or feature use. But how can teams actually work together to measure these things, with or without an analytics expert?

Oftentimes on UX teams, measurement and analytics seem outside of design’s purview. We design the solution and assume others will find us the answers to such questions. But this is not usually the case, nor should it be.

While designers do not need to be experts in analytics, we can be more involved in measuring our work by collaborating with the analysts and developers who implement analytics. As we make this connection, we need to be aware that design and analytics implementation often happen on different timelines, and that gaps or silos between departments can get in the way.

Below are some steps you can take to start regularly thinking about and measuring the impact of your team’s user experience work.

For those of you who are new to UX measurement, here’s a brief orientation to the terms we’ll use throughout this blog post. If you’re familiar with these terms, just skip down to the next section to jump in.

Measurement is a broad term we use to discuss tracking the user experience of a website or app over time based on agreed-upon metrics and goals. We track these metrics using analytics and other indicators like customer feedback surveys, reports, and more.

Analytics allow us to collect, measure, and interpret metrics that give insight into user behavior on a website, app, or digital product.

Metrics are specific, quantifiable measurements, like visits to a site or time spent on a page.

Design with Achievable Analytics in Mind (Before It’s Too Late)

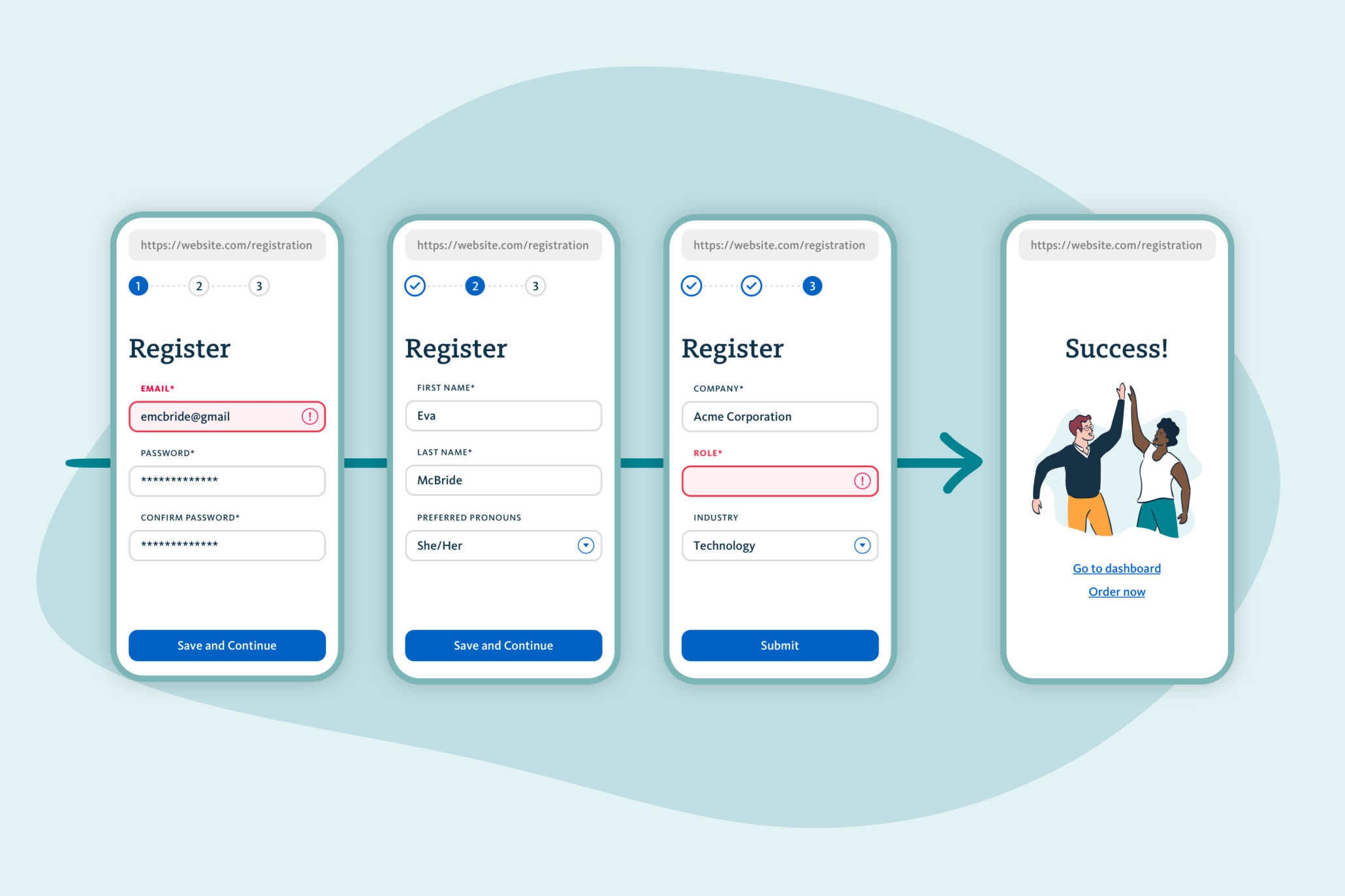

Imagine you are redesigning a registration form with a goal of increasing registration count by at least 10%. As in the example above, you’ve used a multi-page approach to help the process seem more manageable to the user. You’ve included proper error messaging to redirect the user after she’s encountered any issues. You’ve designed a delightful success screen directing the user to the most common tasks post-registration. The form is developed and launched, and users are seamlessly registering every day. Or are they?

You may be asking yourself the following questions:

- How do you know how many users have registered?

- Where do users abandon the form?

- How often do they encounter error messages?

- Which option do they choose on the success screen?

- How do you iterate without these answers?

- How do you know if this redesign has increased sales or strengthened customer loyalty?

- How do you know if something is broken?

- How do you know if this redesign has increased registration count? If it hasn’t, why not?
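The last question in that list comes down to simple arithmetic once registrations are actually being counted. The sketch below is a minimal, hypothetical example: the function names and the figures are illustrative assumptions, and it compares raw counts from two equal-length periods, so it does not account for seasonality, campaigns, or other shifts in traffic.

```python
def registration_lift(baseline: int, post_launch: int) -> float:
    """Relative change in registrations, e.g. 0.08 for an 8% increase."""
    if baseline <= 0:
        raise ValueError("baseline must be a positive registration count")
    return (post_launch - baseline) / baseline


def met_goal(baseline: int, post_launch: int, target_pct: int = 10) -> bool:
    """True if registrations grew by at least target_pct percent.

    Integer arithmetic avoids floating-point surprises right at the threshold.
    """
    if baseline <= 0:
        raise ValueError("baseline must be a positive registration count")
    return (post_launch - baseline) * 100 >= target_pct * baseline


# Hypothetical monthly counts before and after the redesign.
print(f"{registration_lift(1000, 1080):.0%}")  # 8%
print(met_goal(1000, 1080))                    # False
print(met_goal(1000, 1100))                    # True
```

Neither number exists to compare unless registration tracking is in place both before and after launch.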

Asking these questions during the design process and before development even begins is crucial. Teams need time to generate requirements for what needs to be tracked or measured, as well as time to implement the measurement solution.

For example, in the case of our multi-page registration form above, we need to consider how it’s being built. If the form is built as a single-page app, the URL may not change as the user moves from step to step. Traditional analytics platforms rely on a unique URL to track visitors at each step, so you will not be able to rely on traditional or “out-of-the-box” analytics as easily. Creating a unique URL for each step, for example by adding a query string to each URL, or determining an alternative solution, such as tracking interactions on each button, requires dedicated analytics and development work. It also requires a clear plan for which buttons and steps to track so that they can be measured effectively after launch.
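If each step does get its own URL, answering “Where do users abandon the form?” becomes a matter of counting how many sessions reached each step. The Python sketch below assumes the app records a virtual page URL such as `/register?step=2` for every step and that pageviews can be exported as `(session_id, url)` pairs; the `step` parameter and the function names are hypothetical, not part of any particular analytics platform.

```python
from collections import Counter
from urllib.parse import parse_qs, urlencode, urlsplit


def step_url(base_path: str, step: int) -> str:
    """Build a distinct URL for one form step, e.g. /register?step=2."""
    return f"{base_path}?{urlencode({'step': step})}"


def sessions_per_step(pageviews, base_path: str = "/register") -> Counter:
    """Count unique sessions that viewed each step of the form.

    pageviews: iterable of (session_id, url) pairs.
    """
    seen = set()  # (session_id, step) pairs, so reloads are counted once
    for session_id, url in pageviews:
        parts = urlsplit(url)
        if parts.path != base_path:
            continue
        step = parse_qs(parts.query).get("step", [""])[0]
        if step.isdigit():
            seen.add((session_id, int(step)))
    return Counter(step for _, step in seen)


def drop_off(counts: Counter, total_steps: int) -> dict:
    """Share of sessions that reached a step but not the next one."""
    return {
        step: 1 - counts[step + 1] / counts[step]
        for step in range(1, total_steps)
        if counts[step]
    }


# Hypothetical export: three sessions start, one finishes all three steps.
pageviews = [
    ("a", step_url("/register", 1)), ("a", step_url("/register", 2)),
    ("a", step_url("/register", 3)),
    ("b", step_url("/register", 1)), ("b", step_url("/register", 2)),
    ("c", step_url("/register", 1)), ("c", step_url("/register", 1)),
]
counts = sessions_per_step(pageviews)
print(dict(sorted(counts.items())))  # {1: 3, 2: 2, 3: 1}
print({s: round(r, 2) for s, r in drop_off(counts, 3).items()})  # {1: 0.33, 2: 0.5}
```

Tracking a click on each step’s button would work the same way, with event names taking the place of URLs.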

In this scenario, there are a number of ways you could still measure the experience, but some ways are more scalable for your analysts to implement than others. By involving the development or analyst teams early, you can find the best compromise between the design, the build, and the measurement of the form experience in the long term.

When sharing work, designers should bring up the questions they’d like to be able to answer about the design and align with key stakeholders on these goals. If measurement is considered too late, teams risk losing the ability to track important metrics.
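One lightweight way to capture that alignment is a tracking plan that pairs each question with the events needed to answer it and the goal each event supports. The sketch below is hypothetical: the event names, triggers, and goal categories are illustrative assumptions to be agreed with your analysts and developers, not a standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedEvent:
    name: str     # hypothetical event name agreed with analysts/developers
    trigger: str  # what in the interface fires the event
    goal: str     # experience or business goal the event supports


# Hypothetical tracking plan for the multi-page registration form.
TRACKING_PLAN = {
    "Where do users abandon the form?": [
        TrackedEvent("registration_step_view", "each form step is shown", "completion"),
    ],
    "How often do they encounter error messages?": [
        TrackedEvent("registration_error_shown", "an error message is displayed", "usability"),
    ],
    "Which option do they choose on the success screen?": [
        TrackedEvent("success_option_click", "a success-screen option is clicked", "engagement"),
    ],
    "Has registration count increased by at least 10%?": [
        TrackedEvent("registration_complete", "the success screen loads", "registrations"),
    ],
}


def unanswerable(plan: dict) -> list:
    """Questions that no planned event would help answer yet."""
    return [question for question, events in plan.items() if not events]


def events_by_goal(plan: dict) -> dict:
    """Group planned event names by the goal they support."""
    grouped: dict = {}
    for events in plan.values():
        for event in events:
            grouped.setdefault(event.goal, set()).add(event.name)
    return grouped


print(unanswerable(TRACKING_PLAN))            # []
print(sorted(events_by_goal(TRACKING_PLAN)))  # ['completion', 'engagement', 'registrations', 'usability']
```

Keeping the plan in a reviewable form makes it easy to spot questions that no event covers before development starts.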

Identify Who Else in Your Organization Can Support UX Measurement

In addition to asking questions about your designs at the right time, it’s crucial to direct these questions to the right people. Potential measurement support might range from the team building your product or site to an individual analyst. If your team doesn’t have analysts or developers to help implement measurement to answer your questions, you can learn from what others in the organization are measuring.

For example, content teams use SEO metrics, marketing teams measure social media and display ad performance, and call centers collect feedback from customers. Without an analyst or developer to instrument the registration form, you might be able to work with the content team to explore the rate of organic search traffic to the form, or work with the call center to determine how many customers call with registration issues.

When engaging with various teams, keep in mind that each department’s understanding of how its metrics relate to the user experience depends on how much it knows about the user’s full journey. Additionally, different teams may use different naming conventions for metrics, which can create inconsistency. Try to communicate clearly, avoid jargon, and share as much contextual information with these teams as possible.

Be on the Lookout for These Other Common Measurement Pitfalls

In addition to the right timing and right teammates, there are other common measurement pitfalls to watch out for:

- You assume you can measure retroactively. It’s common to take for granted that if you have some analytics in place, you can go back and analyze specific data later. Most tracking only collects data from the moment it is set up, so it needs to be in place at launch to deliver the full picture of your new experience. In general, metrics work best when they are planned out ahead of development.

- You expect to measure everything. There is an endless number of ways you could measure the experience of your site or product, and it’s impossible to track everything. This means you need to prioritize which metrics will provide the most value for the work it takes to implement and measure them. Then, add metrics as needed over time.

- You take for granted that metrics will be intuitive. Any analytics setup beyond the most basic metrics will require some planning in order to ensure that the data can be analyzed in a useful way. This means you should make sure that you can categorize button or page interactions based on relevant experience or business goals. An analyst will struggle to build these metrics in a cohesive manner without clear direction from the team that will track these goals.

- You assume that existing analytics will work properly after launch. Let’s say you are in the fortunate position of having someone build a robust and reliable set of metrics for a current design. Any major redesign is likely to bring significant changes, such as buttons being moved or removed, a migration to a new CMS or platform, or any number of other updates. All of these changes have the potential to break existing metrics. Make sure you plan for this before launching a redesign or pushing out new changes.
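For the last two pitfalls, a simple post-launch check can compare the planned events against what is actually arriving. The sketch below assumes you can export event names from two comparable time windows, one before and one after a release; the event names and the 50% threshold are arbitrary, hypothetical choices. A flagged drop may reflect a real change in user behavior rather than broken tracking, so treat it as a prompt to investigate.

```python
from collections import Counter


def event_health(planned, before, after, min_ratio: float = 0.5) -> dict:
    """Flag planned events that stopped firing or dropped sharply after a release.

    planned: event names from the tracking plan.
    before, after: event names exported from comparable time windows.
    min_ratio: hypothetical threshold; an after/before ratio below it is flagged.
    """
    before_counts, after_counts = Counter(before), Counter(after)
    report = {}
    for name in planned:
        b, a = before_counts[name], after_counts[name]
        if a == 0:
            report[name] = "missing"   # never fired after launch
        elif b and a / b < min_ratio:
            report[name] = "dropped"   # fires far less often than before
    return report


planned = ["registration_step_view", "registration_error_shown", "registration_complete"]
before = (["registration_step_view"] * 100 + ["registration_error_shown"] * 20
          + ["registration_complete"] * 40)
after = ["registration_step_view"] * 95 + ["registration_complete"] * 10
print(event_health(planned, before, after))
# {'registration_error_shown': 'missing', 'registration_complete': 'dropped'}
```

Because a brand-new event has no "before" count, it is only flagged if it never fires at all, which is exactly the case where retroactive measurement would be impossible.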

As designers and team leaders, you can work to ensure your designs are appropriately measured without needing an extensive analytics background. You can ask the right teammates specific questions early in the design process. In the absence of development or analytics resources, you can be flexible about using data from different teams. And you will know you are successful when you’re able to answer questions like, “Have we reached our goal of increasing registration count by at least 10%?”